The background is a map of sensory (visual) information encoded with a much longer time constant, i.e., information that changes much more slowly. In other words, objects that are static or sustained are encoded into the background.

The background image, $B_t$, can be computed per pixel as a running (exponentially weighted) average of the incoming images $I_t$:

$$B_t(x, y) = (1 - \alpha)\, B_{t-1}(x, y) + \alpha\, I_t(x, y)$$

where $\alpha$ is in the range $[0, 1]$ and controls the time constant: the larger $\alpha$, the faster the background adapts. Generally one should start by setting $\alpha$ to a small number, like 0.001.

The adaptive parameter $\alpha$ can also be throttled. In the presence of a high degree of change, or transients, $\alpha$ should be set to zero so that the background does not pick up the transients; otherwise $\alpha$ should gradually increase to 1 to speed up background learning.
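The running-average update and the throttling of the adaptive parameter can be sketched in Python as follows. This is a minimal sketch, not the author's implementation. It assumes images are stored as lists of rows of floats, and the helper names (`update_background`, `changed_fraction`, `next_alpha`) and the throttle settings (`diff_threshold`, `step`, `max_changed_fraction`) are hypothetical choices for illustration.

```python
# Sketch of a per-pixel running-average background with a throttled alpha.
# Images are lists of rows of floats; all names and defaults are hypothetical.

def update_background(background, image, alpha):
    """Return (1 - alpha) * background + alpha * image, pixel by pixel."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return [
        [(1.0 - alpha) * b + alpha * i for b, i in zip(b_row, i_row)]
        for b_row, i_row in zip(background, image)
    ]


def changed_fraction(background, image, diff_threshold):
    """Fraction of pixels whose value differs from the background by more
    than diff_threshold; used here as a crude measure of transients."""
    total = changed = 0
    for b_row, i_row in zip(background, image):
        for b, i in zip(b_row, i_row):
            total += 1
            if abs(i - b) > diff_threshold:
                changed += 1
    return changed / total if total else 0.0


def next_alpha(prev_alpha, fraction, step=0.001,
               max_changed_fraction=0.05, max_alpha=1.0):
    """Freeze learning (alpha = 0) during heavy change; otherwise ramp
    alpha up gradually, capped at max_alpha."""
    if fraction > max_changed_fraction:
        return 0.0
    return min(max_alpha, prev_alpha + step)
```

In a capture loop one would measure `changed_fraction` against the current background, pick the next alpha with `next_alpha`, and only then call `update_background`. The additive ramp is one simple way to "gradually increase" the parameter after a freeze. A multiplicative ramp would get stuck at zero.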

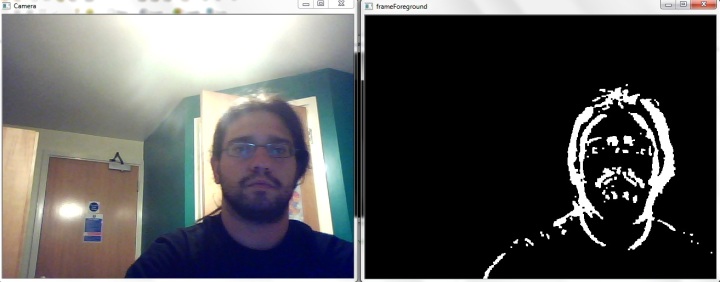

The foreground is the transient component of a scene, defined as the difference $F_t$ between the instantaneous image $I_t$ and the background $B_t$. The difference can be computed by simple subtraction:

$$F_t(x, y) = I_t(x, y) - B_t(x, y)$$
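The subtraction is a plain per-pixel difference, and it is worth keeping its sign (rather than taking an absolute value) because the sign is what later distinguishes presence from absence. Below is a minimal sketch, assuming images are lists of rows of numbers. The function name `foreground` is hypothetical.

```python
def foreground(image, background):
    """Signed per-pixel difference: current image minus background."""
    return [
        [i - b for i, b in zip(i_row, b_row)]
        for i_row, b_row in zip(image, background)
    ]


# Example with depth values in millimetres: an object at 1200 mm in front
# of a wall at 2000 mm gives a negative foreground value at that pixel.
fg = foreground([[1200, 2000]], [[2000, 2000]])
```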

Finding Entities in the Foreground

Each foreground entity is evaluated for what it is and where it is. But what constitutes an entity? An observed entity produces a blob or contour in the foreground-background subtraction; if a depth sensor is used, that contour also coincides with a depth discontinuity, which separates the foreground from the background. In other words, an entity in the foreground is one enclosed by a contour of depth discontinuity.
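To make "a contour of depth discontinuity" concrete, the sketch below marks every pixel whose depth jumps by more than a threshold relative to its right or lower neighbour. It is an illustrative assumption, not the author's method. The function name `depth_discontinuities` and the `jump` threshold are hypothetical, and depth maps are assumed to be lists of rows of numbers.

```python
def depth_discontinuities(depth, jump=100.0):
    """Boolean map that is True wherever a pixel's depth differs from its
    right or lower neighbour by more than `jump`. Both pixels on either
    side of the jump are marked, so a closed object gets an enclosing ring."""
    rows = len(depth)
    cols = len(depth[0]) if rows else 0
    edges = [[False] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            for dy, dx in ((0, 1), (1, 0)):  # right and lower neighbours
                ny, nx = y + dy, x + dx
                if ny < rows and nx < cols and abs(depth[y][x] - depth[ny][nx]) > jump:
                    edges[y][x] = True
                    edges[ny][nx] = True
    return edges
```

For a single near pixel (1200 mm) in the middle of a 2000 mm wall, the marked pixels are that pixel and its four direct neighbours.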

Using OpenCV, the foreground can be converted to a binary image using an appropriate depth threshold. The binary image is then cleaned up with morphological operators, such as opening, and the cleaned binary image is passed to a contour-finding routine, which extracts the individual contours. Each contour, together with the depth map, amplitude map and color image, can then be used for object recognition.
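In OpenCV these steps map roughly onto `cv2.threshold`, `cv2.morphologyEx` with `cv2.MORPH_OPEN`, and `cv2.findContours`. So that it runs without OpenCV, the sketch below reimplements the pipeline in pure Python. It is a simplified stand-in, not the OpenCV algorithms. It uses a 3x3 square structuring element, and it uses 4-connected component labelling in place of contour tracing, returning each entity as a list of pixel coordinates rather than a boundary. All function names and the threshold value are hypothetical.

```python
from collections import deque


def threshold(fg, depth_threshold):
    """Binary mask: True where the foreground magnitude exceeds the threshold."""
    return [[abs(v) > depth_threshold for v in row] for row in fg]


def _morph(mask, erode):
    """3x3 erosion (erode=True) or dilation (erode=False).
    Neighbours outside the image are ignored."""
    rows, cols = len(mask), len(mask[0])
    out = [[False] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            vals = [mask[ny][nx]
                    for ny in range(max(0, y - 1), min(rows, y + 2))
                    for nx in range(max(0, x - 1), min(cols, x + 2))]
            out[y][x] = all(vals) if erode else any(vals)
    return out


def open_mask(mask):
    """Morphological opening: erosion followed by dilation removes specks."""
    return _morph(_morph(mask, erode=True), erode=False)


def connected_components(mask):
    """Group True pixels into 4-connected blobs, each a list of (row, col)."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for y in range(rows):
        for x in range(cols):
            if not mask[y][x] or seen[y][x]:
                continue
            blob, queue = [], deque([(y, x)])
            seen[y][x] = True
            while queue:  # breadth-first flood fill
                cy, cx = queue.popleft()
                blob.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            blobs.append(blob)
    return blobs
```

With a 3x3 object plus a single-pixel noise speck, thresholding alone yields two blobs. After opening, only the object survives.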

Presence and Absence of Entities

The foreground from subtraction can indicate either the presence or the absence of entities. Again, if a depth map is used, negative foreground means presence and positive foreground means absence, because foreground objects are closer to the sensor and therefore have lower depth values than the background behind them.
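The sign rule can be applied per pixel to a signed depth foreground (current depth minus background depth). Below is a minimal sketch. The function name `classify_change` and the `tolerance` noise margin are hypothetical, and a real system would tune the margin to the sensor's depth noise.

```python
def classify_change(fg, tolerance=50):
    """Label each pixel of a signed depth foreground (image - background):
    'presence' where it is clearly negative (something is now closer),
    'absence' where it is clearly positive (a background object is gone),
    None where the change is within the noise tolerance."""
    return [
        ["presence" if v < -tolerance else "absence" if v > tolerance else None
         for v in row]
        for row in fg
    ]
```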

Encoding the What and the Where


(The above article is solely the expressed opinion of the author and does not necessarily reflect the position of his current and past employers and associations)